Importer Configuration

The importer is an application used to extract projects from the CDO server database to a local folder. It produces one zip file per modeling project. It can also be used to import the user profiles model.

The importer also extracts information from the CDO commit history to produce a representation of the activity on the repository. This information is called Activity Metadata. See help chapter The commit history view and Commit description preferences for a complete explanation. By default, the importer extracts Activity Metadata for every commit in the repository. Be aware that the -projectName parameter has no impact on this feature: commits that do not affect the selected project are exported as well. It is, however, possible to restrict the range of commits using the -from and -to parameters.

1. Importer strategies

Several import strategies are supported by the Importer application:

- Connected import: the Importer application establishes a connection to the targeted repository and imports the models.
  - This is the default strategy of the Importer application.
  - Credentials might be required if the server has been configured to use identification, authentication or user profiles, see Server Configuration job documentation.
- Offline import: this mode performs the import from a snapshot of the targeted repository.
  - There is no connection to the server and no interaction with other users: no credentials are required for the Importer application.
  - It also avoids overloading the server and can be done in a separate environment.
  - It can be enabled with the -importFilePath parameter. Refer to this parameter documentation in the next section for more details.
  - A snapshot is an XML extraction of the repository. It can be obtained manually by executing the cdo export command on the server OSGi console.
- Snapshot import: the Importer application sends a snapshot creation command to the server, then uses the created snapshot to perform an Offline import.
  - This is the strategy used by the Project - Import Scheduler job.
  - Since the XML extraction is more efficient than the Connected import, this option keeps most of the benefit of the simple Offline import.
  - It can be enabled with the -cdoExport true parameter together with -importFilePath, which defines where to create and then consume the snapshot.
  - Note: With this strategy, a lock preventing any commit from connected users is acquired. While the snapshot is being created, connected users cannot commit their changes. The lock is released once the snapshot is complete. If the lock cannot be acquired (after three attempts), the import is abandoned. The number of attempts (three by default) can be overridden through a system property. For instance, to set the number of attempts to two:

    -Dcom.thalesgroup.mde.melody.importer.maxAttemptsCdoExport=2

See also Projects — Import job documentation.
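The three strategies differ only in the arguments passed to the Importer. The following Python sketch is not part of the product; it simply maps each strategy to the arguments named above. The function name strategy_arguments, the strategy keywords and the snapshot path are hypothetical, and only -importFilePath and -cdoExport come from this documentation.

```python
from typing import List, Optional


def strategy_arguments(strategy: str, import_file_path: Optional[str] = None) -> List[str]:
    """Return the importer arguments that select the given import strategy."""
    if strategy == "connected":
        # Default strategy: no dedicated argument is needed.
        return []
    if import_file_path is None:
        # Offline and Snapshot imports both read the snapshot from -importFilePath.
        raise ValueError("-importFilePath is mandatory for Offline and Snapshot imports")
    if strategy == "offline":
        return ["-importFilePath", import_file_path]
    if strategy == "snapshot":
        # The server creates the snapshot first, then the importer consumes it.
        return ["-cdoExport", "true", "-importFilePath", import_file_path]
    raise ValueError(f"unknown strategy: {strategy}")


# Hypothetical snapshot location, used for illustration only.
print(strategy_arguments("snapshot", "C:/snapshots/repoCapella.xml"))
```

The returned list would be appended to the other importer arguments, such as -outputFolder and -repoName.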

2. Importer parameters

Note: The importer.bat file uses -vmargs as a standard Eclipse parameter. Eclipse parameters used by importer.bat override the values defined in the capella.ini file. If you change a system property that exists in capella.ini (-vmargs -Xmx3000m for example), do not forget to make the same change in importer.bat.

The importer needs credentials to connect to the CDO server if the server has been started with authentication or user profile.

Credentials can be provided using either -repositoryCredentials or -repositoryLogin and -repositoryPassword parameters.

Credentials are required only for Connected import (see the Importer strategies section above for more details).
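As an illustration of the "either ... or" rule above, the following Python sketch builds the credential arguments and rejects a mix of both mechanisms. It is a hypothetical helper, not part of the importer: the function name, its keyword arguments and the file path in the usage line are assumptions, and the rejection of mixed usage is how this sketch reads the rule, not a documented importer behavior.

```python
from typing import List, Optional


def credential_arguments(
    credentials_file: Optional[str] = None,
    login: Optional[str] = None,
    password: Optional[str] = None,
) -> List[str]:
    """Build the credential arguments for a Connected import."""
    if credentials_file is not None:
        if login is not None or password is not None:
            # Assumption: the two mechanisms are treated as mutually exclusive.
            raise ValueError(
                "use either -repositoryCredentials or "
                "-repositoryLogin/-repositoryPassword, not both"
            )
        return ["-repositoryCredentials", credentials_file]
    arguments: List[str] = []
    if login is not None:
        arguments += ["-repositoryLogin", login]
    if password is not None:
        arguments += ["-repositoryPassword", password]
    # An empty list fits Offline and Snapshot imports, which need no credentials.
    return arguments


# Hypothetical credentials file location, used for illustration only.
print(credential_arguments(credentials_file="C:/secure/importer.credentials"))
```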

Here is the list of arguments that can be passed to the Importer (in importer.bat or in a launch configuration):

| Arguments | Description |
|---|---|
| -repositoryCredentials | Login and password can be provided using a credentials file. This is the recommended way for confidentiality reasons. If the credentials file does not contain any password, the password is looked up in the Eclipse secure storage (see How to set the password in secure storage). This parameter must not be used together with -repositoryLogin or -repositoryPassword. The credentials file has the form aLogin:aPassword. Note: Credentials are required only for Connected import (see the Importer strategies section above for more details). |
| -repositoryLogin | The importer needs a login in order to connect to the CDO server if the server has been started with authentication or user profile. Note: Credentials are required only for Connected import (see the Importer strategies section above for more details). |
| -repositoryPassword | This parameter provides the importer with the password matching the login. Warning: some special characters, such as the double quote, might not be properly handled when passed as an argument to the importer. The recommended way to provide credentials is through the -repositoryCredentials file or the secure storage. Note: Credentials are required only for Connected import (see the Importer strategies section above for more details). |
| -hostname | Define the team server hostname (default: localhost). |
| -port | Define the team server port (default: 2036). |
| -connectionType | The connection kind can be set to tcp or ssl, in lowercase (default: tcp). |
| -httpLogin | The Importer application will trigger an HTTP request. This argument gives the login used to identify on the Jetty server. |
| -httpPassword | The Importer application will trigger an HTTP request. This argument gives the password used to authenticate on the Jetty server. |
| -httpPort | The Importer application will trigger an HTTP request. This argument gives the port used to communicate with the Jetty server. |
| -httpsConnection | The Importer application will trigger an HTTP request. This boolean argument specifies whether the connection should be HTTPS or HTTP. |
| -importType | The backup is available in five different modes (default: PROJECT_AND_COMMIT_HISTORY). |
| -repoName | Define the team server repository name (default: repoCapella). |
| -projectName | By default, all projects are imported, i.e. with default value "*" (with the right -importType parameter). Argument "-projectName X" can be used to import only project X. Encoded and decoded names are both accepted (e.g. you can use either -projectName "Prj A", -projectName Prj%20A or -projectName "Prj%20A") (default: *). |
| -runEvery | Import every x minutes (default: -1, disabled). |
| -outputFolder | Define the folder where to import projects (default: workspace). |
| -logFolder | Define the folder where to save logs (default: the value of -outputFolder). |
| -archiveProject | Define if the project should be zipped (default: true). Each project will be zipped in a separate archive suffixed with the date. Some additional archives can also be created. Note: Some library resources may not be referenced by the current project and so are not included in the zip. |
| -overrideExistingProject | If the output folder already contains a project with the same name, this argument allows removing this existing project. |
| -closeServerOnFailure | Ask to close the server on project import failure (default: false). If the server hosts several repositories, it is better to use the parameter -stopRepositoryOnFailure. |
| -stopRepositoryOnFailure | Ask to stop the repository on project import failure (default: false). |
| -backupDBOnFailure | Back up the server database on project import failure (default: true). |
| -checkSize | Minimum project zip file size, in KB, under which the import of this project fails (default: -1, no check). |
| -checkSession | Do some checks and log information about each imported project (default: true). |
| -errorOnInvalidCDOUri | Raise an error on CDO URI consistency check (default: true). |
| -addTimestampToResultFile | Add a timestamp to the names of result files (.zip, logs, commit history) (default: true). |
| -optimizedImportPolicy | This option is no longer available as of 1.1.2. |
| -maxRefreshAttemptBeforeFailure | The maximum number of refresh attempts before failing (default: 10). If the number of attempts is reached, the import of a project will fail, but as this is due to the activity of remote users on the model, this specific failure will not close the repository or the server even with -stopRepositoryOnFailure or -closeServerOnFailure set to true. |
| -timeout | Session timeout in ms (default: 60000). |
| -importImages | Define which images should be imported. Possible values are ALL, USED and NONE (default: ALL). |
| -checkout | The timestamp specifying the date corresponding to the state of the projects that will be imported (refer to the following note for details on the accepted formats). If empty, the framework connects to the repository with a transaction on the repository head (current state). When this parameter is used, the framework opens a read-only view on the repository at the given time instead of a transaction on the repository head. This option is meaningful only if -importType is one of ALL, PROJECT_ONLY, SECURITY_ONLY or PROJECT_AND_COMMIT_HISTORY. |
| -from | The timestamp specifying the date from which the metadata will be imported (refer to the following note for details on the accepted formats). If omitted, the import starts from the first commit of the repository. The timestamp can also be computed from an Activity Metadata model. In that case, this parameter can be either a URL or a file system path to the location of the model. If the date corresponds to a commit, this commit is included. Otherwise the framework selects the closest commit following this date. When a previous Activity Metadata model is used, the last commit of the previous import is also included. |
| -to | The timestamp specifying the latest commit used to import metadata (refer to the following note for details on the accepted formats). If omitted, the import runs up to the last commit of the repository. The framework selects the closest commit preceding this date. Be careful: this parameter only impacts the range of commits for importing activity metadata from the CDO server. Using this parameter will not import the version of the model defined by the given date. See the -checkout argument for that purpose. |
| -squashCommitHistory | Squash consecutive commits done by the same user with the same description (default: true). |
| -importCommitHistoryAsText | Import commit history in a text file using a textual syntax (default: false). The file has the same path as the commit history model file, but with txt as extension. |
| -importCommitHistoryAsJson | Import commit history in a JSON file (default: false). The file has the same path as the commit history model file, but with json as extension. |
| -includeCommitHistoryChanges | Import the commit history detailed changes for each commit done by a user with one of the save actions (default: false). The changes of commits done by wizards, actions and command line tools are not computed; those commits have a description which begins with specific tags such as [Export], [Delete], [Maintenance], [User Profile], [Import], [Dump]. This option applies to all kinds of commit history exports (xmi, text or json files). Warning about the importer performance: if this parameter is set to true, the importer might take more time, particularly if the history of commits is long. |
| -computeImpactedRepresentationsForCommitHistoryChanges | Compute the impacted representations while exporting changes (default: false). For each commit with changes to export, the impacted representations are computed. Warning about the importer performance: if this parameter is set to true, the importer might take more time, particularly if the history of commits is long. |
| -importFilePath | This option allows performing the import based on an XML or binary extraction of the repository. It is mandatory for Offline and Snapshot imports, see the Importer strategies section for more details. It is recommended to provide an absolute path. A path ending with ".bin" will trigger the binary load, other extensions will trigger the XML load. Some arguments related to the server connection will be ignored. Only the arguments -outputFolder and -repoName are mandatory. |
| -XMLImportFilePath | (deprecated) See -importFilePath. |
| -cdoExport | This option allows sending a snapshot creation command to the server before performing the import, as described in the Importer strategies section (default: false). |
| -archiveCdoExportResult | This option defines whether the XML file resulting from the cdo export command, launched by the importer as an intermediate step (if -cdoExport is true), should be zipped (default: false). If the option is true, the zip of the XML file is created in the output folder (see -outputFolder documentation) and the XML file is then deleted. |
| -eclipse.keyring | This option specifies the location of the secure storage file used by Eclipse to store passwords and sensitive information. The secure storage helps protect confidential data by encrypting it with a master key. By default, the secure storage file is located in the .eclipse folder of the user directory. You can change this location by using this option. |
| -eclipse.password | This option specifies the path of the file containing the master key password used by Eclipse to encrypt data in the secure storage. By default, the secure storage system uses a password provider mechanism to protect the master password used to encrypt data in the secure storage. |
| -help | Print help message. |

Note: The timestamp arguments (checkout, from, to) must use the following formats:

For example, for the date 03/08/2017 10h14m28s453ms in the time zone +0100, use the argument "2017-08-03T10:14:28.453+0100".
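A timestamp in the form of the example above can be produced programmatically. The Python sketch below is an illustration only: the function name importer_timestamp is hypothetical, and it covers just the format shown in the example (date, time, milliseconds and numeric time zone offset), not any other accepted format.

```python
from datetime import datetime, timedelta, timezone


def importer_timestamp(moment: datetime) -> str:
    """Format a timezone-aware datetime like 2017-08-03T10:14:28.453+0100."""
    if moment.tzinfo is None:
        raise ValueError("a timezone-aware datetime is required")
    # strftime has no millisecond directive, so truncate the microseconds.
    millis = moment.microsecond // 1000
    return moment.strftime("%Y-%m-%dT%H:%M:%S") + f".{millis:03d}" + moment.strftime("%z")


# The date of the example above: 3 August 2017, 10h14m28s453ms, time zone +0100.
example = datetime(2017, 8, 3, 10, 14, 28, 453000, tzinfo=timezone(timedelta(hours=1)))
print(importer_timestamp(example))  # prints 2017-08-03T10:14:28.453+0100
```

The resulting string can be passed as the value of -checkout, -from or -to.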

Note: If the server has been started with user profile, the Importer needs to have write access to the whole repository (including the user profiles model). See the Resource permission pattern examples section. If this recommendation is not followed, the Importer might not be able to correctly prepare the model (proxies and dangling references cleaning, …). This may lead to a failed import.

Note: The importer uses the default configuration of Capella and does not need its own configuration area. For this to work properly, the importer needs read/write permission on the configuration area of Capella; otherwise it can fail with access denied errors. This commonly happens when the Scheduler is launched as a Windows service, because the user account running the service does not necessarily have read/write permission on Capella's configuration area. If you cannot grant this permission to the importer, a workaround is to give it a dedicated configuration area by adding the argument -Dosgi.configuration.area="path/to/importer/configuration/area" at the end of the importer.bat file and, if necessary, updating the existing argument -data importer-workspace to point to a location with read/write permission.

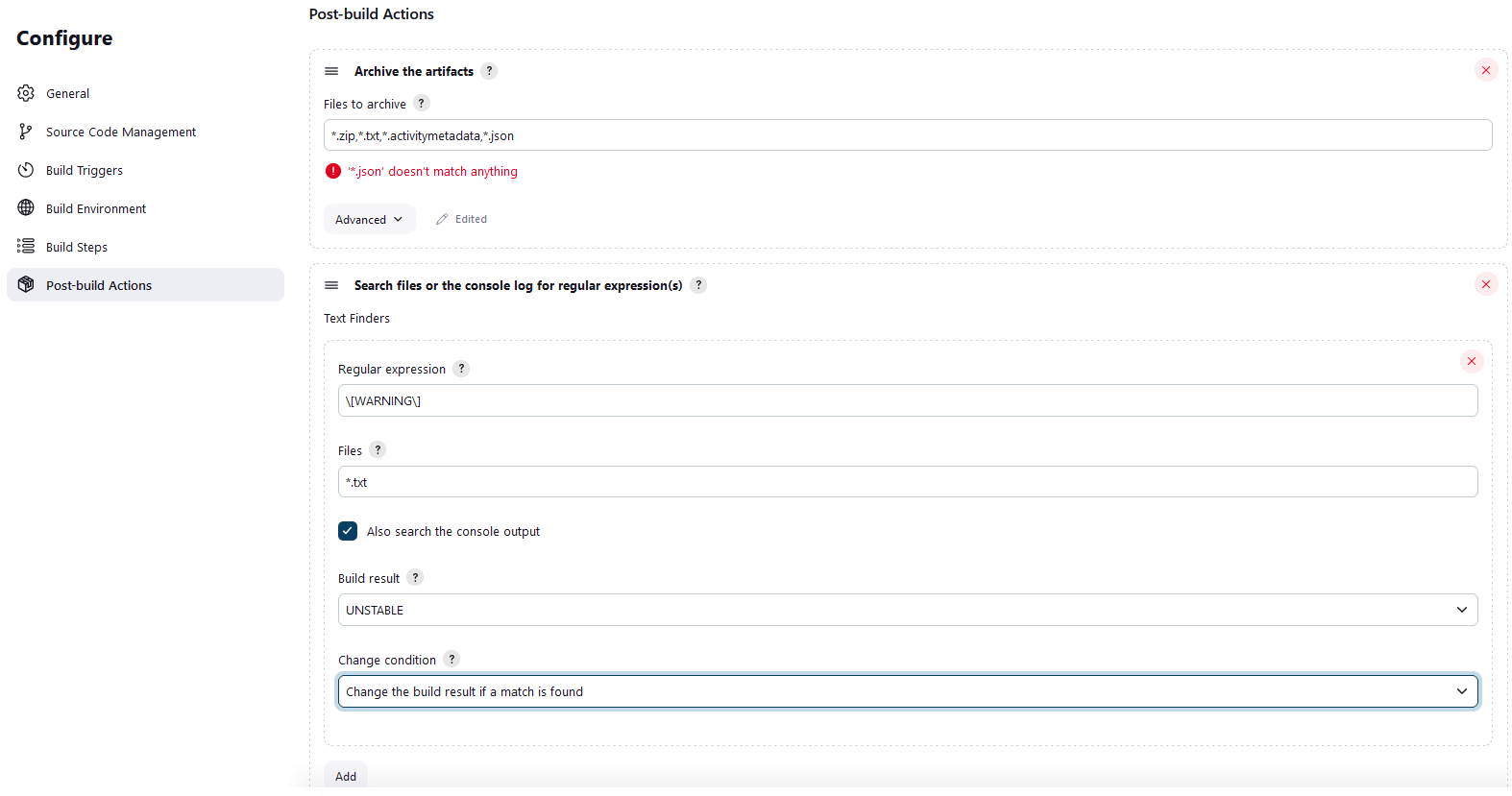

2.1. Jenkins Text Finder configuration

The job contains a post-build action that verifies that the commit history metadata text file has been generated. This check assumes that the exportCommitHistory parameter keeps its default value, true:

If you set the exportCommitHistory parameter to false, this check makes the build unstable. In that case, deactivate the option "Unstable if found" to avoid a warning that is meaningless when the parameter is false. Do not forget to reactivate it if you set the parameter back to true.

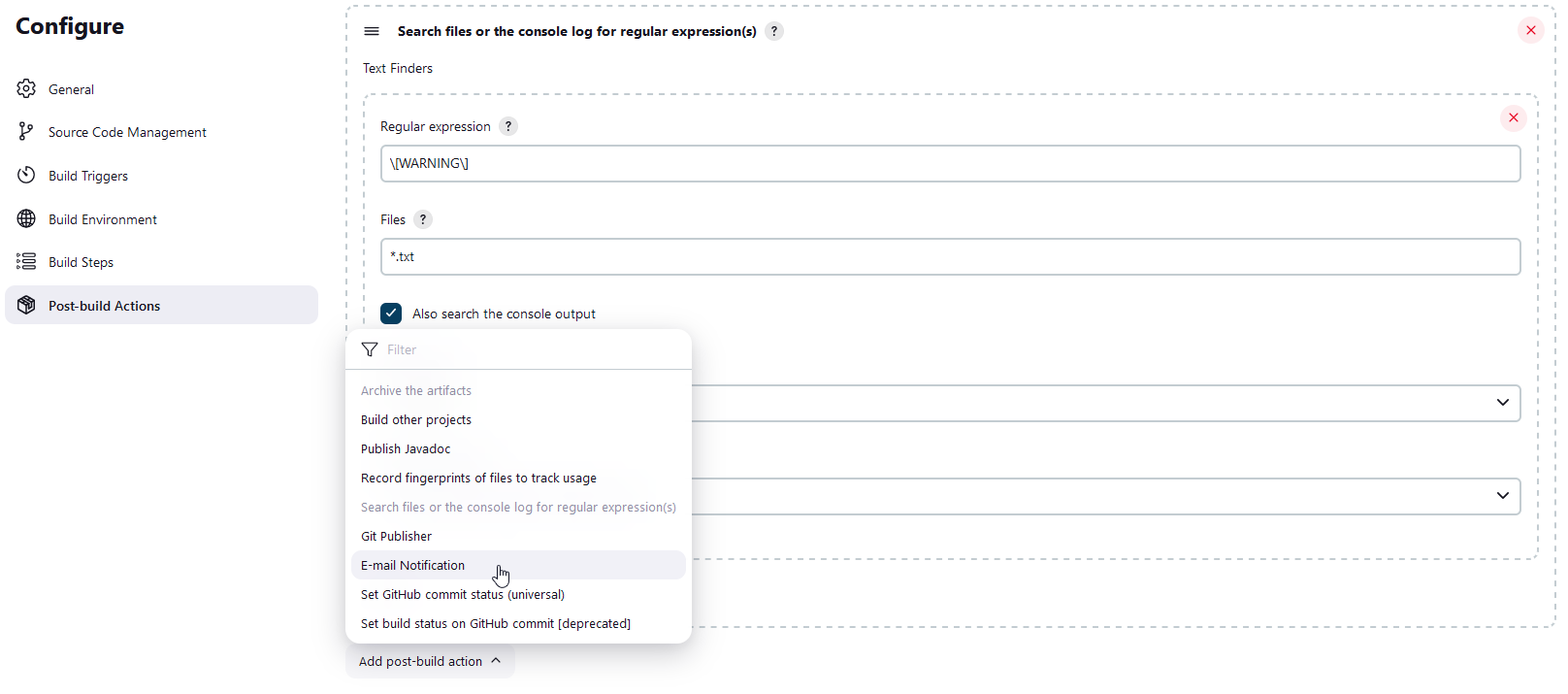

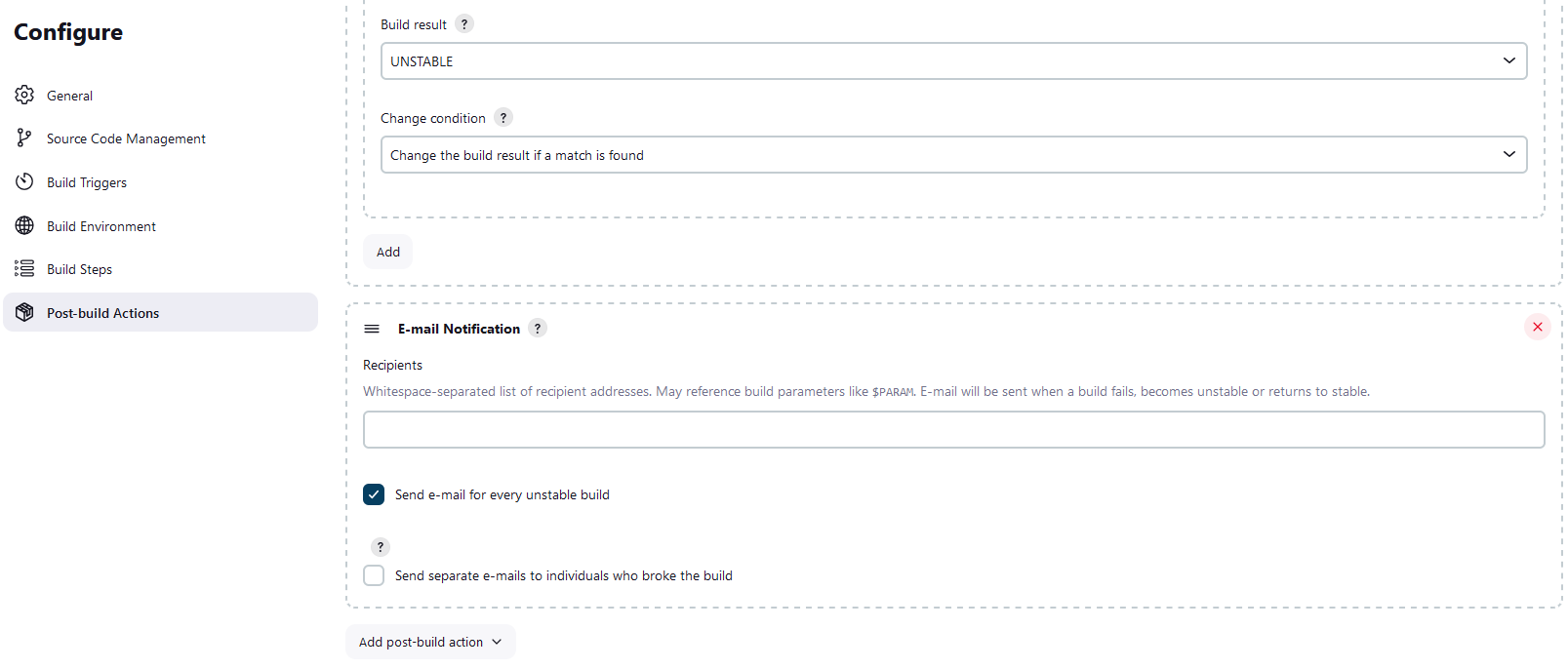

2.2. Add e-mail notification on failed backup

Thanks to the Jenkins Text Finder post-build action, if the logs of a build contain the text Warning, the build is marked as unstable (with a yellow icon). You can go further and be notified by e-mail in that case. In the Project - Import configuration page, scroll down or select the Post-build Actions tab. There, click on the Add post-build action button and choose E-mail notification.

In this new action, you just need to add the e-mail addresses to notify when a build is unstable.

2.3. How to set the password in secure storage

The importer does not use the same credentials as the user. Its credentials are stored in a different entry of the Eclipse Secure Storage. Storing and clearing these credentials requires a dedicated application that can be executed as an Eclipse Application or using a Jenkins job.

3. Examples

importer.bat -nosplash -data importer-workspace -closeServerOnFailure true -backupDbOnFailure true -outputFolder C:/TeamForCapella/capella/result -connectionType ssl -checkSize 10

importer.bat -nosplash -data importer-workspace -closeServerOnFailure false -backupDbOnFailure false -outputFolder C:/TeamForCapella/capella/result -connectionType ssl -checkSize -1 -importType SECURITY_ONLY
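When the importer is launched from a script, the command line can be assembled from a dictionary of options. The Python sketch below is a hypothetical helper, not part of the product: the function name build_importer_command is an assumption, and the option names and values are copied from the first example above.

```python
from typing import Dict, List


def build_importer_command(options: Dict[str, str]) -> List[str]:
    """Turn a dictionary of importer options into an argument list."""
    # Fixed prefix taken from the examples above.
    command = ["importer.bat", "-nosplash", "-data", "importer-workspace"]
    for name, value in options.items():
        # Each option becomes "-name value", in insertion order.
        command += [f"-{name}", value]
    return command


nightly = build_importer_command({
    "closeServerOnFailure": "true",
    "backupDbOnFailure": "true",
    "outputFolder": "C:/TeamForCapella/capella/result",
    "connectionType": "ssl",
    "checkSize": "10",
})
# Joined with spaces, this reproduces the first example command line.
print(" ".join(nightly))
```

Keeping the arguments in a list avoids quoting issues with values that contain spaces, such as project names.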